X

26 Dec 2019

This is X.

This article is an entry in the 2019 F# Advent Calendar in English. Thanks to Sergey Tihon for organizing it, as always!

The F# community, while small compared to those of many other languages, is known to be exceedingly open and helpful to beginners. I experienced this myself when I started learning the language nearly six years ago, and people (especially the F# MVPs) were always quick and eager to answer my newbie questions on Twitter, or wrote extensive answers on Stack Overflow or Code Review. Having had this experience early on has caused me and others to try and make the start easier for those who come after us where we can, be it on the aforementioned platforms or for example in the mentorship program run by the F# Software Foundation.

Another platform with the specific aim of providing mentoring for learning programming languages (F# is one of over 50 language “tracks” at this point, from assembly and Bash to Haskell and Prolog) that I’ve been involved with over the past eight months is Exercism.

The concept of Exercism has two pillars - test driven development, and dedicated feedback from people with experience in the specific language you are trying to learn, whom the site calls “mentors”.

You download an exercise via the command line client - for F#, that gives you a .NET Core project with two files, one of which contains unit tests, and the other is the file in which you implement your solutions. The tests start out mostly skipped; the idea is to implement enough of the solution to get one test to pass, and then un-skip the next test and make that pass in turn. There’s a lot of similarity here to the well-known concept of “katas”, except that writing the tests is not part of the exercise, as they already exist.

When all the tests pass, you can submit the solution through the client, and can then request feedback from a mentor. One of the mentors will then pick the solution from the queue and make suggestions for improvement; you can then update your solution and submit a new iteration, and then either get more suggestions for another round, or the mentor will “approve” your solution, which you can then publish on your profile and move on to the next exercise.

If you are going through the language track in “mentored mode”, and the exercise was one of the “core” exercises, there will also be a new core exercise and probably several “side” exercises unlocked. (The other mode is “practice mode”, where all exercises are available immediately, and you can tackle them in any order; you will still be able to request mentor feedback in this mode.)

My task as a mentor is to help the students take their solution from working code to an idiomatic, readable and maintainable implementation by suggesting improvements. These can be more cosmetic things, such as clearer naming or more readable formatting, changes around the usage of various language constructs, types or core library functions, or sometimes a completely new approach if a solution is very imperative or, in the other extreme, goes overboard with functional programming gimmicks.

The idea there of course is not to just give people finished code and say “this is what you should have done”. Instead, I will point out things that could be improved, explain why I think that is, and often mention specific language concepts (say, Active Patterns) or functions in the core library to consider. There is also no point in students just writing what I tell them to get me to approve a solution - the goal is always to help people get a better understanding of the language and build habits for writing better F# code. I have been reading Kit Eason’s “Stylish F#” recently, and this early paragraph sums up my idea of mentoring perfectly:

This paragraph from @kitlovesfsharp's "Stylish F#" describes exactly the everyday challenge of mentoring on @exercism_io - giving people suggestions and the reasoning behind them. I say "consider doing x" a lot and should probably do it a lot more.

— Тэ дрэвэт утвикλэрэн (@TeaDrivenDev) December 6, 2019

In that spirit, I aim to let students keep as many of their own ideas as possible, even if the result is not the exact code I would have written. A handful of the simpler exercises don’t leave a lot of room for variation, and there’s exactly one good solution, sometimes down to the letter. For many, the idea will always be more or less the same, but details of the implementation can vary, and for the more complex exercises, pretty much every solution will be unique to that student, from the initial attempt through the entire trail of revisions.

The students on the F# track come from all kinds of different programming backgrounds (where I can tell). Of course, a lot of them have experience with C#, but many don’t know .NET at all, and every now and then, there’s someone who’s well-versed in functional programming in languages such as Haskell or Lisp.

Consequently, students’ approaches and attitude can vary greatly. Often people will have a hunch that their solution is not optimal, but lack the knowledge about certain language features (such as function composition, or destructuring tuples instead of using fst and snd) or functionality available in the core library (like the functions in the Option module to avoid pattern matching on options). Some will naturally reach for mutable values or data structures, because it’s what they know (that doesn’t happen all that much, though). And those that already know functional programming will try to work around F#’s “inadequacies” regarding certain higher functional concepts (and have me stumped when they explain to me what they’re trying to achieve - with a Lisp example).
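To make those hints a bit more concrete, here is a minimal sketch of the three things mentioned above (tuple destructuring instead of fst and snd, Option.defaultValue instead of matching on an option, and function composition). The names and sample data are made up for illustration and are not taken from any Exercism exercise.

```fsharp
// Hypothetical sample data: (name, optional score) pairs
let results = [ "Ada", Some 3; "Grace", None ]

// Using fst and snd works, but hides what the tuple elements mean
let describeVerbose (result : string * int option) =
    match snd result with
    | Some score -> sprintf "%s: %d" (fst result) score
    | None -> sprintf "%s: %d" (fst result) 0

// Destructuring the tuple in the parameter and using Option.defaultValue
// removes both the fst/snd calls and the match on the option
let describe (name, score) =
    sprintf "%s: %d" name (Option.defaultValue 0 score)

// Function composition builds a new function without naming the argument
let describeAll = List.map describe >> String.concat ", "

let summary = describeAll results
```

Both versions produce the same text for a given pair; the second one is simply what a mentor would usually nudge a student towards.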

Related to that, every student will have their own specific target regarding how “good” they want the code to be. Many are content with achieving the minimum solution that will make me approve the code, while others will also implement additional suggestions or ask about ways to improve things they think may not be great (as mentioned above). And occasionally, a student will just tinker on their own for a few days and submit a number of iterations with all the ideas they tried. This is all of course dependent on the individual student’s general programming proficiency - more experienced programmers will more easily delve deeper when learning a new language.

Different students also behave very differently in terms of communication. Many never write anything in the comments area unless they’re really stuck and have to ask to get unblocked. Those typically seem to be people who are generally more experienced with programming and can work just with the hints I give them. Less experienced programmers of course ask more questions, because they may need more guidance to understand certain concepts. Others are just very interested in the background of various things and how they are implemented under the covers, or are concerned about performance.

Depending on the student’s needs and understanding, and the specific exercise, the time I need to invest also varies widely. For some simple exercises, I usually don’t even run the tests anymore - I know what a good, working solution looks like, and I know how to get there from various other states, and for many things I have the answers or suggestions in a text file I’ve built up over time (because I don’t want to write the same sentence suggesting Option.defaultValue 200 times; it also helps with being consistent in my answers).

For more complicated exercises, or when students ask very specific questions, the effort will be greater, sometimes by a lot - I try every improvement I want to suggest for a student’s code myself to make sure it actually works and makes sense, and occasionally I’ll even dive into the FSharp.Core source code for some background information (and everyone who’s seen that knows it can be a confusing place). In rare cases, a single reply will take me half an hour or more to work out, and while most solutions go through no more than five iterations (and many are approved sooner), every now and then one will reach ten or more.

Now, all that can be a significant time investment at times, but it’s definitely worth it. One aspect is simply giving back to the F# community as mentioned and giving others an easier start. Another one is that teaching something is an important way to get better at it oneself, for a few reasons:

One bigger thing I need to mention here specifically: The exercise “Tree Building” is about refactoring working code to a more idiomatic solution, and as the instructions also mentioned it was “slow”, I tried to check solutions for performance as well, but did not really have a way to do that. When I mentored Scott Hutchinson’s solution, we got talking about that, and Scott suggested using BenchmarkDotNet, which I had no experience with.

With some sample code from Scott I set up benchmarking for the exercise for myself to be able to really judge students’ submissions in that regard, comparing them with the original code, the fastest solution I could find (which incidentally was Scott’s at the time), and mine (just out of interest). Then, to be able to tell students what they could do to improve the performance of their solutions, I needed to have made it faster myself - incidentally my own solution at that point was still fairly slow (and in particular allocated a lot of memory), so I didn’t have to look far. As I knew Scott’s code was fast, I looked at that for inspiration - and with a few changes “accidentally” made mine not only faster than it was before, but even slightly faster than his (which probably mostly comes down to slightly lower memory allocations).

Now, while that helped me for mentoring, students still wouldn’t have a way to know how much faster or slower any changes made their code, which of course wasn’t ideal. I suggested officially adding benchmarking to the exercise and making the performance aspect an official consideration, an idea that Erik Schierboom, the maintainer of the F# track (among many other things at Exercism), was very excited about, and I eventually sent a pull request to implement the suggestion.

If you’re just starting to learn F# (or any of the 50 other languages), or if you want to improve your fluency in the language, have a look at Exercism. There are over 100 F# exercises covering pretty much any language feature (short of type providers), so you can keep yourself (and us) busy for a while.

And if you think you don’t need the practice, or at least not the mentoring, consider becoming a mentor; we’ve cleaned up the queue quite well in recent weeks, but we could do with one or two more active mentors who can spare an hour two or three times a week, to even out the wait times for students a bit more.

This article is an entry in the 2016 F# Advent Calendar in English.

First, I need to apologize for being so late with my contribution. I had actually booked a slot on December 20th, but my originally chosen topic turned out to be a very bad fit for a written article (I'd like to do that as a video at some point, though), so I had to start over with something else, which also proved very difficult to write for some reason, but here we go....

Chances are that you've come to this article from the 2016 F# Advent Calendar overview on Sergey Tihon's blog, where the full schedule is presented in a table showing the already written articles as well as the dates and authors for the remaining ones, so you get a full overview of the whole event.

One thing that this doesn't facilitate (as inherent in the medium) is keeping track of which articles you have or haven't read. What would be nice for that is an RSS feed, but we don't have that. We have all the information that we need, though, to build one ourselves - using F#, of course.

This will be a rather low-tech solution - we run a script that generates the XML file for the RSS feed, and then we put that somewhere on the Internet. That means there is some manual work involved once a day or so, but that way we only need somewhere to put a static file (I'm going to use GitHub Pages). This manual step can be eliminated if there's a place available to run a small webservice that checks the overview a few times a day and regenerates the feed on the server as needed.

We will use the HTML type provider from FSharp.Data to deal with the HTML. So we add the FSharp.Data package with Paket, reference the DLL in a new .fsx script file and create our Page type from the type provider.

Note that this does not constrain us to processing that one specific page. As long as the structure is what we expect, we can still pass in a different page later.

If we look at the HTML for a single post, that is a single row in the table, we find this:

What we need from this is the date, the author's name, the post title and the post URL, all of which are easy enough to obtain. We'll keep them in this small F# record:

However, before we start implementing this, we'll have a look at a special case from 2015:

Scott had so much content (as usual, one might say) that a single post would have gotten far, far too long, so he published several installments, all of which are listed for his one calendar entry. The proper way to handle that in our feed is probably creating an entry for each installment, so we need to be able to extract all the links and then treat them as if they were separate rows.

There are also rows in the table that we want to ignore: the header row, and entry rows without actual links to articles, either because they're simply in the future and the articles don't exist yet, or because they contain a "coming soon" notice, like the one for this post at the time of writing.

If we look at a row, it's always a <tr> node containing three <td> nodes, the first of which is the date as simple text, the second the author's name in a link to the tweet or message announcing their participation, and the third contains the article links as <a> tags, if there are any.

A delay notice in the third column starts with a <span> tag to change the text color:

We'll read the content of the cells with Active Patterns, because F# programmers love Active Patterns (I know I do).

One of the happiest moments for an F# programmer is looking at a problem and thinking "Active. F#%ing. Patterns."

— Тэ дрэвэт утвикλэрэн (@TeaDrivenDev) May 6, 2016

And they actually make things nice and clear in this case, as we'll see.
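As a rough sketch of the idea (not the original script), the following uses System.Xml.Linq on a well-formed table row as a stand-in for the nodes the HTML type provider would deliver. The `Post` record, its field names, the `xn` helper and the pattern names `Row` and `Links` are all assumptions made for illustration.

```fsharp
open System.Xml.Linq

// Hypothetical record for one feed entry; the field names are assumptions
type Post = { Date : string; Author : string; Title : string; Url : string }

let xn (name : string) = XName.Get name

// Matches a <tr> element that has exactly three <td> children
let (|Row|_|) (element : XElement) =
    match element.Name.LocalName, List.ofSeq (element.Elements(xn "td")) with
    | "tr", [ date; author; links ] -> Some (date, author, links)
    | _ -> None

// Matches a cell that contains at least one <a href="..."> link
let (|Links|_|) (cell : XElement) =
    let links =
        cell.Descendants(xn "a")
        |> Seq.choose (fun a ->
            match a.Attribute(xn "href") with
            | null -> None
            | href -> Some (a.Value.Trim(), href.Value))
        |> List.ofSeq
    if List.isEmpty links then None else Some links

// Header rows and rows without article links produce no posts;
// a row with several links produces one post per link
let postsFromRow (row : XElement) =
    match row with
    | Row (date, author, Links links) ->
        links
        |> List.map (fun (title, url) ->
            { Date = date.Value.Trim()
              Author = author.Value.Trim()
              Title = title
              Url = url })
    | _ -> []
```

Nesting `Links` inside `Row` is what keeps the match readable: the header row fails the outer pattern, a "coming soon" row fails the inner one, and a multi-installment entry simply yields several posts.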

Let's look at what that gets us.

Now that we have all the information, we can reassemble it into the RSS feed.

The basic XML for an RSS feed looks like this:

Inside the channel tag, there will be the individual items, each looking like this:

We could use format strings here, but as I don't feel like dealing with multiline strings, it's going to be XLINQ.
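A minimal sketch of what building the feed with XLINQ could look like. The helper names (`xn`, `textElement`, `parentElement`), the parameters of `createItem` and `createFeed`, and the choice of item elements are assumptions, not the original script.

```fsharp
open System
open System.Xml.Linq

let xn (name : string) = XName.Get name

// Creates <name>text</name>
let textElement name (text : string) = XElement(xn name, text)

// Creates <name> containing the given child elements
let parentElement name (children : XElement list) =
    let parent = XElement(xn name)
    for child in children do
        parent.Add(child)
    parent

// One <item> per article; the link doubles as the guid here (an assumption)
let createItem (title : string) (url : string) (published : DateTime) =
    parentElement "item" [
        textElement "title" title
        textElement "link" url
        textElement "guid" url
        // "r" formats the date in the RFC 1123 style used for pubDate
        textElement "pubDate" (published.ToString("r"))
    ]

let createFeed (title : string) (link : string) (items : XElement list) =
    let header =
        [ textElement "title" title
          textElement "link" link
          textElement "description" title ]
    let rss = parentElement "rss" [ parentElement "channel" (header @ items) ]
    rss.SetAttributeValue(xn "version", "2.0")
    XDocument(XDeclaration("1.0", "utf-8", null), rss)
```

Calling `Save` with a file path on the resulting `XDocument` would then write the feed to disk.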

That was all pretty straightforward. Now that we can create the feed XML, we only need to write the actual XML file to disk.

Now that we have created the RSS file(s), the only thing left is publishing it. Depending on where we do that, it might require different steps, but for the typical things like FTP upload or publishing via Git, FAKE makes that easy anyway. I'm putting the feed on my GitHub Pages site, so we need to commit it to Git. We use Paket to add FakeLib to our project, then reference it in our script, and add the calls to stage and commit the RSS file(s). We could also push to GitHub from here, but I prefer to do that by hand.

That's it, essentially. For the (in this case short remaining) duration of the F# Advent Calendar, we run this once a day or so and publish the updated feed to GitHub.

This script can process the overview pages for 2015 and 2016; the one for 2014 is a little different and would need special treatment.

There is one very annoying problem that we are going to have with the feed specifically if (as with me) Feedly is being used as the RSS aggregator. When subscribing to a feed, Feedly only pulls the first 10 items (which in our case are the latest ones, since we've reversed the order). If we're adding a feed with over 50 entries at once now, we'd lose most of the articles. There is a workaround, but it requires control over publishing the feed: Updating a feed in Feedly appears to have no limit on the number of items. That means if we initially create the feed with only 10 items, publish it and add it to Feedly, and only then generate the rest of the items and publish again, Feedly will correctly pick up all the entries. So for the first time creating the feed, we need a slightly modified version of the createFeed function:

For all subsequent generation runs, we switch back to the original function without the Seq.truncate call.
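The effect of that call can be seen in isolation in this small sketch; the integer lists are just hypothetical stand-ins for the feed items.

```fsharp
// Hypothetical stand-in for the list of feed items, newest first
let allItems = [ 1 .. 50 ]

// First publication: keep only the ten newest items
let initialItems = allItems |> Seq.truncate 10 |> List.ofSeq

// Seq.truncate does not fail on shorter inputs (unlike Seq.take),
// so the same code is safe early in December as well
let fewItems = [ 1 .. 3 ] |> Seq.truncate 10 |> List.ofSeq
```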

There's still one issue with that solution that I haven't been able to fix, though: Feedly will only give those first 10 entries their actual pubDate values from the RSS file, and date all others as they arrive. That means that in the case of 2016, we'll get correctly dated entries until about the beginning of December, and after that, there will be 40+ articles with the date when we first publish the full feed. But as we have the date in the title for each entry, and the specific dates generally shouldn't have any relevance for the articles' content, that's probably something we can live with.

The 2016 feed is at https://teadrivendev.github.io/public/fsadvent2016.rss; I will update it for the few remaining days of the 2016 calendar. So in case you find this useful (and don't use Feedly), you can just use that.

The raw script code from this article can be found in this gist.

Everyone who has used F# pattern matching knows how powerful it is, but interestingly it is often overlooked that all the different aspects are always equally applicable.

Destructuring tuples is something every F# programmer is probably well versed in in all its forms:
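A few of those forms, as a minimal sketch with a made-up name tuple (the binding names are chosen only for this illustration):

```fsharp
let name = "Don", "Syme"

// In a let binding
let firstName, lastName = name

// In a function parameter
let fullName (first, last) = sprintf "%s %s" first last

// In a lambda
let lastNames = [ name; ("Kit", "Eason") ] |> List.map (fun (_, last) -> last)

// In a match expression, combined with a comparison against a literal
let isSyme person =
    match person with
    | _, "Syme" -> true
    | _ -> false

// In a for loop inside a list expression
let greetings = [ for first, _ in [ name ] -> "Hello, " + first ]
```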

....you get the picture. Did I forget something important?

Now, in all those cases (there may be rare exceptions that I'm not presently aware of), you can also use the other forms of pattern matching, such as Active Patterns.

Yes, you can use Active Patterns in function signatures.
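As a small illustration, here is a sketch with a hypothetical single-case Active Pattern `Trimmed` used directly in a function parameter and in a lambda:

```fsharp
// A single-case (total) Active Pattern that normalizes a string
let (|Trimmed|) (text : string) = text.Trim()

// The pattern is applied to the argument before the function body runs
let greet (Trimmed name) = sprintf "Hello, %s!" name

// The same works for lambda arguments
let lengths = [ " a "; "bc" ] |> List.map (fun (Trimmed text) -> text.Length)
```

Because `Trimmed` always matches, the compiler has nothing to warn about here; a partial pattern in the same position would produce an incomplete-match warning.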

You can also use a type test in a for loop:

(The compiler will notify you that there might be unmatched cases that would then be ignored - so this works like LINQ's .OfType<'T>().)
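A sketch of such a loop, with a made-up list of boxed values; only the strings reach the loop body, and the compiler emits the incomplete-match warning mentioned above:

```fsharp
let items : obj list = [ box 1; box "two"; box 3.0; box "four" ]

let strings = ResizeArray<string>()

// Elements that are not strings do not match the pattern and are skipped
for :? string as s in items do
    strings.Add s
```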

Something that seems to be a little less well known is pattern matching on the fields of record types, both in the form of destructuring assignments and actual comparison matches, but that works just as well in all those cases. The syntax for it is the same as if you were constructing a record of the respective type, except that it is not required to use all the fields of the type.

Let's say we have turned the above name tuple into a proper type:

We can use this now in all the ways we used the tuple above:
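A minimal sketch of what that can look like. The `Name` record and its field names are assumptions for this example, since the original definition is not shown here.

```fsharp
// Assumed record type for the example
type Name = { FirstName : string; LastName : string }

let don = { FirstName = "Don"; LastName = "Syme" }

// Destructuring assignment; only the fields of interest need to be listed
let { FirstName = first } = don

// In a function parameter
let fullName { FirstName = first; LastName = last } = sprintf "%s %s" first last

// Comparison match on one field while binding another
let greet person =
    match person with
    | { LastName = "Syme"; FirstName = first } -> sprintf "Hello, %s from the Syme family" first
    | { FirstName = first } -> sprintf "Hello, %s" first

// In a lambda
let lastNames = [ don ] |> List.map (fun { LastName = last } -> last)
```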

Now, while this is pretty neat, I don't find myself using it a lot, which is in part due to the fact that when for example matching the argument of a lambda this way, you lose the reference to the record itself.

When I wrote this on Twitter, Shane Charles had the right answer: You can always additionally bind the original value to a name with the as keyword. Always.

The most common use of this concept in pattern matching is probably type tests:

But this really works pretty much everywhere (even though it might not always make sense to use it):
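Two of those places, sketched with an assumed `Name` record and made-up data: a lambda that keeps the whole record next to a destructured field, and a for loop that combines a comparison match with `as`.

```fsharp
// Assumed record type for the example
type Name = { FirstName : string; LastName : string }

let people =
    [ { FirstName = "Don"; LastName = "Syme" }
      { FirstName = "Kit"; LastName = "Eason" } ]

// In a lambda: destructure one field and keep the whole record
let labelled =
    people |> List.map (fun ({ FirstName = first } as person) -> first, person)

// In a for loop with a comparison match: records that do not match
// are skipped, and the compiler warns about the incomplete match
let symes = ResizeArray<Name>()
for ({ LastName = "Syme" } as person) in people do
    symes.Add person
```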

That last one again gives us a compiler warning about incomplete matches, but anyone not from the Syme family will simply be ignored.

By the way, you can of course also use as with Active Patterns:

While this is a bit nonsensical, it demonstrates the point: The fizzBuzz function has type int -> string * int, and while the signature does not actually give a reference to the integer argument, we can still obtain it from the match expression.
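One way such a function could be written is sketched below. The case names of the Active Pattern are assumptions; only the name `fizzBuzz` and its type `int -> string * int` come from the text.

```fsharp
// Complete Active Pattern classifying an integer
let (|Fizz|Buzz|FizzBuzz|Other|) n =
    match n % 3, n % 5 with
    | 0, 0 -> FizzBuzz
    | 0, _ -> Fizz
    | _, 0 -> Buzz
    | _ -> Other

// There is no named argument: the input is only reachable through "as"
let fizzBuzz =
    function
    | Fizz as n -> "Fizz", n
    | Buzz as n -> "Buzz", n
    | FizzBuzz as n -> "FizzBuzz", n
    | Other as n -> string n, n
```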

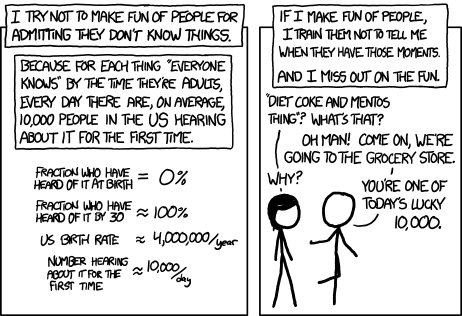

Did you know about pattern matching on record fields? If you didn't, you too (along with Reid Evans and John Azariah) are now one of today's 10.000!

(via XKCD)

Written on my HP ZBook with Ionide and Visual Studio Code

This post is one of the December 11th entries in the English language 2015 F# Advent Calendar.

As much as we like our software to be fast, and although computers get ever more powerful and paralleloriz0red, still not everything can happen instantly. There are long-running calculations, file I/O, network operations, Thread.Sleep and other things that require the user to wait for their completion before they can use the result in any way.

It is considered common courtesy these days to not just let the application stall until an operation is completed and expect patience and understanding from the user; instead we'd like to let them know that something is happening and there is still hope that this "happening" will be over in a finite amount of time. And if we have any way of telling at which point of the process we are, we'll also want to pass that information on to the user, so they can plan the rest of their day accordingly.

The overall premise for what we're doing here is that a) we have a desktop application, say in WPF, and b) the "result" of our long-running operation is a state change in the application. The latter isn't very functional, because seen from our operation, it is a side effect, but that is how .NET desktop applications usually work.

First we define a basic type to describe our concept of "busy":

The implementation of IBusy will likely be something like a ViewModel, which will then take care of displaying a progress bar, a text control with the busy message and maybe some kind of overlay to lock down the application until our operation is complete - if we're loading new data for example, it probably makes no sense to let the user work in the application in the meantime. When we're done, we set IsBusy to false, and the application can be used again.

Using this might look like this:

This is of course the most naive way possible, and it requires doing everything by hand correctly every time we want to do something "busy".

That will get annoying pretty quickly, so we'll put the whole IBusy handling in a function and then pass it the operation we want to execute:

That's better - it takes all the manual work from us. We only need to say "do operation while telling the user you're busy, and when the operation is through, signal the user you're done". This is nice and declarative and really the minimum work we can have with this.
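For reference, a minimal sketch of how the type and the function might look. Only the names IBusy, IsBusy, ProgressPercentage and doBusy come from the text; the `BusyMessage` member, the `SimpleBusy` stand-in class, the parameter order and the try/finally are assumptions.

```fsharp
// Assumed shape of the "busy" abstraction
type IBusy =
    abstract IsBusy : bool with get, set
    abstract BusyMessage : string with get, set
    abstract ProgressPercentage : int option with get, set

// Minimal stand-in for a ViewModel (hypothetical, no UI binding)
type SimpleBusy () =
    let mutable isBusy = false
    let mutable message = ""
    let mutable progress : int option = None
    interface IBusy with
        member this.IsBusy with get () = isBusy and set value = isBusy <- value
        member this.BusyMessage with get () = message and set value = message <- value
        member this.ProgressPercentage with get () = progress and set value = progress <- value

// Wraps an operation in the "busy" handling; still runs on the calling thread
let doBusy (busy : IBusy) (message : string) (operation : unit -> unit) =
    busy.BusyMessage <- message
    busy.ProgressPercentage <- None
    busy.IsBusy <- true
    try
        operation ()
    finally
        // Resetting the flag even when the operation throws is an
        // addition in this sketch, not something the text describes
        busy.IsBusy <- false
```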

But.... it doesn't work. Well, it does, but not as we'd like it to. I said above that we want to "not just let the application stall until an operation is completed and expect patience and understanding from the user" - but that is what will happen. Why? Because we're just running everything on the current thread, which in a desktop application will usually be the UI thread.

If we're in WPF, chances are the thread will be blocked by operation before it has even managed to properly show the "busy" indication. And even if the user sees that, the whole application will now be frozen; the Windows task manager might even say "not responding", until at some point our operation completes and unblocks the thread. That is not very friendly.

There are a number of ways to solve this in .NET, from "manual" threading control and BackgroundWorker to Tasks and C#'s async/await - and in F# we have the nicest of them all: asynchronous workflows. They all work a bit differently, and what we want to do is not straightforward to achieve with all of them - with manual threading control via the Thread class for example, it would be quite an ordeal (but then, pretty much everything is).

One thing that is different with F# async workflows from, say, BackgroundWorker or async/await is that we have to explicitly switch to a background thread, because it doesn't happen automatically. The upside is that we have that control, unlike the other implementations.

Let's look at a doBusyBackground implementation of our function:

As I said, we have control over which thread we're running on, and we have to exert it; that's why we first save the current synchronization context and restore it later on.

Note that even though our operation function may have no asynchronous aspects in itself, we have to call it in an asynchronous workflow to have it executed on the thread we switched to via the Async class.

But look at how easy that was! We just wrap something in an async { } block (some people claim it's a monad, but I refuse to accept that), and we can "await" it in a non-blocking fashion, which is what the do! accomplishes. It needs to be said that in this case operation has the type unit -> unit, that means it is a completely self-contained effectful operation and returns no useful value. We will later see that we can just as well return values of any type from asynchronous workflows.

Async.StartImmediate is of type Async<unit> -> unit, that means it takes an asynchronous operation and runs it in a "fire-and-forget" fashion while itself returning immediately, but without a useful return value.

This current solution is a bit inflexible, though. Our operation will always run on the ThreadPool, which may not be what we want, for example when we need to update UI elements at certain points. Of course we could capture the UI context when we construct the function, but that would start getting a bit convoluted.

Let's split out moving an operation to the ThreadPool from our doBusy function (on the side we will also introduce a default busy message that should be correct in a lot of cases and that we can replace when we really need to):

If we look at the type of the operation argument of doBusyAsync, it has changed from unit -> unit in doBusyBackground to Async<unit>, that means it has to come "pre-wrapped" in an async { } block. That is necessary so we can decide what should go on the ThreadPool and what we want to run on the UI thread (or implement any other threading requirements we may have).

Another thing to note is that we call operation without parentheses now - because it is not a normal F# function anymore, but an asynchronous workflow. "Awaiting" that with do! or let! (which have the same effect, with the difference that let! binds the result to a value we can then keep using, while do!.... doesn't) results in its execution, yielding the 'T value of the Async<'T>. To be exact, nothing inside the async { } blocks actually gets executed until Async.StartImmediate (or one of a number of other Async functions like Async.RunSynchronously or Async.StartWithContinuations) is called. Everything up to that is just setting up the computation.

Our new onThreadPool function is a little more generic than running on the ThreadPool in doBusyBackground was: operation is not a function of type unit -> unit, but also an Async<'a>, which means it returns a value of type 'a (which can still be unit, of course). That means we can not only run "fire-and-forget" style code on the ThreadPool, but we can actually get results back. That sounds like a useful property for our code to have.
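A simplified sketch of the two pieces follows. To stay self-contained it takes a hypothetical `setBusy` function instead of an IBusy, and it splits out a `busyWorkflow` helper that returns the workflow rather than starting it; both are assumptions of this sketch, as is the null check on the synchronization context.

```fsharp
open System.Threading

// Runs the given workflow on the ThreadPool and afterwards returns to the
// synchronization context it was started from, if there was one
let onThreadPool (operation : Async<'a>) =
    async {
        let context = SynchronizationContext.Current
        do! Async.SwitchToThreadPool()
        let! result = operation
        if not (isNull context) then
            do! Async.SwitchToContext context
        return result
    }

// Sets the busy state around an asynchronous operation
let busyWorkflow (setBusy : bool -> unit) (operation : Async<unit>) =
    async {
        setBusy true
        try
            do! operation
        finally
            setBusy false
    }

// Fire-and-forget variant: returns immediately, the workflow keeps running
let doBusyAsync setBusy operation =
    busyWorkflow setBusy operation |> Async.StartImmediate

// onThreadPool also hands results back to the caller
let answer = onThreadPool (async { return 6 * 7 }) |> Async.RunSynchronously
```

In a WPF application, `doBusyAsync` would be called from the UI thread, so the captured context is the UI context; in a script or console program there is none, which is why the sketch guards against null.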

It is especially useful when we consider something that should start to become clear when looking at our existing functions that use asynchronous workflows: They are highly composable. We can nest them and chain them, await them without hassle using let! and then use the result as a normal F# value. Did I mention we can make any piece of code "asynchronous" by putting async { } around it?

If we put together what we have now, we can run an operation on a background thread while our application is "busy" as follows:

When operationFunction completes, the "wrapper" asynchronous workflow created by onThreadPool returns the unit value to doBusyAsync, which does nothing with it, but sets busy.IsBusy to false and thus signals the application is idle again.

Now, as you may have noticed, all of our doBusy implementations so far set busy.ProgressPercentage to None, because we have no way of knowing our actual progress - at least not in doBusy. The actual operation that we run may very well have that knowledge. How do we make use of it?

Let's take our nonsensical "long-running operation" from the start of the post and turn it into a function.

We know the total number of items in the list, and we can find a point in our processing where each individual item is processed, so in addition to the processing, we can also report a progress percentage.

In the simplest case, the implementation of reportProgressPercentage will just be something like fun percentage -> busy.ProgressPercentage <- Some percentage.

Well, that probably kind of works, but rather looks like handicraft work (German: Gebastel, read: "gabustel"). Especially that List.mapi usage (that we need to be able to tell how many items we have already processed) can't even really be called a workaround anymore. It's just clueless gebastel.

Another issue is that this way we would report our progress for every single item we process - if there are a lot of items (and if there weren't, we wouldn't need a computer to process them), that would mean using significant resources just to update the progress display with a frequency the human eye cannot possibly perceive - probably many thousands of times per second.

Before we look at a better solution, let's consider something I mentioned at the very beginning: parrale.... pallellara.... running something on multiple cores instead of just one.

Using the FSharp.Collections.ParallelSeq library, which provides an F# friendly API for Parallel LINQ, we can write the above as follows:

Depending on the number of cores available and how well the work to be done is parallelizable, that can provide a massive performance boost (obviously, because that is kind of the point of having multiple cores in the first place). What happens here is that our input data will be split up into several parts, and the parts will be processed in parallel threads, each on its own core. Again depending on what our data and the actual processing look like, there is no way to tell how fast which thread is processing items, so until PSeq.map returns, we never actually know our progress. The only way to get at that would be counting processed items over all threads - and the way we do that is using Interlocked.Increment.

Of course it isn't, but you believed that for a few clock cycles, right?

What we will really be using is a nice facility that F# offers us called MailboxProcessor, F#'s own lightweight implementation of the Actor model. In a very small nutshell, an "actor" is a.... thing that has a queue of messages (its own private one that nobody else can access), processes any incoming messages sequentially (that means it doesn't care about any new messages until it's done with the currently processed one) and generally can only be communicated with through messages sent to its queue.

("MailboxProcessor" is a somewhat odd and unclear name; it is a common habit among F# programmers to alias the type to Agent.)

A very nice property is that we do not have to care about it threading-wise - as long as we only send it messages and don't immediately expect an answer, it is detached from the threads that we have to handle and care about. It achieves that by also using asynchronous workflows and non-blocking waiting for incoming messages using let!, as we've seen before. The functionality of a MailboxProcessor is usually implemented using a tail recursive function that calls itself with the computed state from processing the current message to then wait for the next message.
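
To illustrate the general shape, here is a small, self-contained sketch of an agent that is unrelated to progress reporting: a counter that keeps a running total as the state of its tail recursive loop. The CounterMessage type and the counter value are made up for this example.

```fsharp
type Agent<'T> = MailboxProcessor<'T>

// Messages the counter understands (hypothetical, for illustration only).
type CounterMessage =
    | Add of int
    | GetTotal of AsyncReplyChannel<int>

let counter =
    Agent<CounterMessage>.Start(fun inbox ->
        // The state (total) is passed along as an argument of the recursive loop.
        let rec loop total =
            async {
                // Non-blocking wait for the next message in the queue
                let! message = inbox.Receive()
                match message with
                | Add n -> return! loop (total + n)
                | GetTotal reply ->
                    reply.Reply total
                    return! loop total
            }
        loop 0)

// Fire-and-forget messages; the caller does not wait for them to be processed
counter.Post (Add 2)
counter.Post (Add 3)

// Asking for an answer does block the caller until the agent replies
let total = counter.PostAndReply(fun reply -> GetTotal reply)
```

Because messages are processed one at a time in the order they arrive, the reply is only sent after both Add messages have been handled.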

What we will let our Agent/Actor do is "collect" the progress updates from all the different threads our parallel sequence uses (that we don't even know about ourselves) and update our IBusy in a sensible interval, let's say every half second - that's more than fast enough for a user to get a good understanding of the actual progress.

What we need to tell the MailboxProcessor's processing function for that is the total number of items to process - so we can calculate a percentage - and the update interval.

When receiving a message, it will add the number of items reported to the current count, then check if the time passed since last setting busy.ProgressPercentage is greater than the update interval, and if so, update the progress value. (We will assume for this that the implementation of IBusy will take care of updating the value that's actually bound to the UI on the appropriate thread; otherwise we would need to do that in the MailboxProcessor, which we could, but don't really want to do.)

For what we want to do, our processing logic doesn't need to know about the actual MailboxProcessor, so we will just give it a function of type int -> unit to call, which the MailboxProcessor already provides with its Post() method.
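
A sketch of how such an agent could be put together under these assumptions: the IBusy interface below is a reduced stand-in with only the ProgressPercentage member, the name createProgressReporter is hypothetical, and totalItems is assumed to be greater than zero. It is one possible reading of the description above, not necessarily the exact original code.

```fsharp
open System

type Agent<'T> = MailboxProcessor<'T>

// Reduced stand-in for IBusy (an assumption); only the member used here.
type IBusy =
    abstract ProgressPercentage : int option with get, set

// Returns a function of type int -> unit that the processing logic can call
// with the number of items it has just finished.
let createProgressReporter (busy: IBusy) (totalItems: int) (updateInterval: TimeSpan) : int -> unit =
    let agent =
        Agent<int>.Start(fun inbox ->
            let rec loop processed (lastUpdate: DateTime) =
                async {
                    let! newlyProcessed = inbox.Receive()
                    let processed = processed + newlyProcessed
                    let now = DateTime.UtcNow
                    if now - lastUpdate >= updateInterval then
                        // Assumes the IBusy implementation handles UI thread marshalling
                        busy.ProgressPercentage <- Some (processed * 100 / totalItems)
                        return! loop processed now
                    else
                        return! loop processed lastUpdate
                }
            // DateTime.MinValue makes the very first message trigger an update
            loop 0 DateTime.MinValue)
    // Only the Post method is handed out, so callers never see the agent itself
    agent.Post
```

With the half-second interval mentioned above, the call would be createProgressReporter busy totalItems (TimeSpan.FromSeconds 0.5), and every worker thread can then call the returned function without any locking of its own.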

Now again let's use all the things we've built together:

I think that ended up looking pretty tidy, didn't it?

processDataThingsParallel will be run on the ThreadPool and process its items using Parallel LINQ, while we have a "busy" display and get progress updates all the time. And when it's done, the "busy" display will disappear, and the application can be used again.

Remember that all our doBusy implementations set busy.ProgressPercentage to None, so by default, we should have an "indeterminate progress" display, and in case our processing logic actually reports progress, it will then be set to Some p, and we'll have a value to show on screen.

One issue I realized while writing this is that handling reportProgress like this isn't ideal, because it's easy to think "hey, that's a function to use for reporting progress, so now that I have that, I'll use it whenever I have progress to report", but in reality, it is parameterized for exactly this one usage (especially because we have already given it the total number of items), and it has the current count as a piece of internal state that can't be reset. That means it is necessary semantically to create a new reportProgress function every time we want an operation to report its progress, but there is nothing that forces us to do so. So that part of our API leaves room for improvement.

A small note on the side - I always went with the concept of the "busy" display locking the application from user interaction; that of course doesn't have to be. It could just as well simply be a small progress bar in a tool bar or status bar that just shows up and disappears as needed. In that case we'd probably have two different implementations of IBusy - one that actually prevents the user from doing things, and one that doesn't.

Although this has taken some time now, it is actually a rather simple solution (but probably sufficient much of the time) based on something I use in practice. This concept could be expanded upon in various ways, including things like

Shoutouts to Reid Evans, whose idea the doBusy concept was and who even turned it into a busy { } computation expression (and who incidentally is my F# advent calendar date-mate today), and to Tomas Petricek, who validated the idea of the onThreadPool function.

Written on my HP ZBook with Ionide and Visual Studio Code